Friday, March 30, 2007

Tragically, I've gone deaf

I went to the Tragically Hip's concert at the Moore Theatre in Seattle tonight.

It was a really, really great show and the audience was definitely doing its best to match Gord Downie's energy level.

Also, you couldn't escape the feeling that everyone in the crowd was an expat. In every cluster of four or more people, there'd be one with a maple leaf, or a hockey jersey, or something.

Oh, and while waiting for an encore, the audience broke into a loud O Canada. I think tonight was the first time I've actually heard an anthem truly being sung -- you could practically feel the groupthink in the room.

Wednesday, March 28, 2007

Metapedia

Wikipedia's a great resource. Usually, it's a good starting point for research. And the bits it's missing are usually called out with "stub templates".

I never realized how childish I was until the other day, when Sarah was researching phalloplasty and I burst out laughing. Ah, metahumour. The best kind.

Sunday, March 25, 2007

Cohen on Howard: Final Thoughts

So, there you have it.

Cohen:

If I were the person at Microsoft responsible for allowing books to be released I would not have approved the book by Michael Howard and David LeBlanc.

In essence, this is a book about how Microsoft has screwed up security in their programming practices over the years and how they are trying to fix it.

It also implies accountability, which is, as far as I can tell, a new thing at Microsoft in terms of software development.

That's right, Microsoft does not want backward compatability, but we all knew that a long time ago.

While this is a good business model, it is a poor information protection approach. Which may be the real reason that Microsoft does so poorly in this arena. They tell us that the most valuable part of a bank is its vault - did they miss the information age somewhere? They probably never worked on bank security.

The book is almost 800 pages, by the way, and it does have a solid 40 pages of worthwhile content, not a bad bloat ratio for some software products.

This is consistent with their expressed view of applying theory where appropriate - that you should never do so. That's part of why they will continue to make big stupid mistakes from time to time.

[...] but careful is not a word I would attribute to the authors of this book in their writing style. They approach the issues with reckless abandon, and that's entertaining at a minimum.

In Part 3, my hopes were dashed. Yes, part 2 continues in part 3. The separation is apparently only a trick to meet an administrative requirement of maximum section sizes, or perhaps a limitation of Word based on an integer overrun.

I think that the lack of time and attention to the underlying issues, the lack of organization and models, and the inconsistencies and poor advice are all related to spending too little time thinking through the issues and organization of the book. This is reflective of the same corporate culture that led to the problems with security at Microsoft and in other software vendors.

A better name might be something like: "How poor quality programmers at Microsoft have produced hundreds of instances of the same 10 big mistakes in their code, and how they can do their jobs a little bit better".

Is that the state of discussion in the software engineering community? Cohen's writing is frequently hindered by poor logic and unchecked facts, and there seem to be more than a few ad hominem attacks on the authors simply because they work for Microsoft.

In my view, there were two good criticisms to come from his review:

1 - the book is poorly organized

2 - the section on input checking proposes regexes as the be-all and end-all of input validation

It seems to me that this could have been said much more succinctly than in 5,400 words. Perhaps some of the words freed up by being more direct could have been used to educate readers on the areas he felt were poorly treated.

Disappointing.

Cohen on Howard: Chapter 24

Chapter 24 covers writing security documentation and error messages.

Cohen:

But the problems in this chapter start early. On the second page they tell us not to use security through obscurity, after having told us in the prior chapter not to tell attackers anything.

This is nitpicking on Cohen's part. Yes, security through obscurity is a doomed tactic. That doesn't mean attackers shouldn't have to work for every bit of information. Why should we shrink the attacker's search space by handing them useful information?

Cohen:

A few pages later, they tell us to not reveal anything sensitive in error messages, then give us what they think is a good example of telling the attacker that the password they just tried was wrong only because some of the characters were in the wrong case. Of course this eliminates the value of using case sensitive passwords in the first place, telling the attacker a great deal of useful information by reducing the search space by several orders of magnitude, but they seem to have missed that.

Cohen seems not only to have missed the thrust of this part of the book, but also to be labouring under the false impression that Windows passwords are case-insensitive.

This section of the chapter is trying to say that error messages should empower legitimate users to solve problems while not giving away useful attack information. For example, rather than saying "Your password is incorrect." you can say "Your password is incorrect. Remember, passwords are case sensitive." to hint that the user needs to pay extra attention. It does not in any way propose that you say "Your password is incorrect. However, if you uppercased the 3rd letter, it would be correct."

Cohen's line of reasoning would have made more sense had he complained that they told the user the password was wrong -- thus implying that the username was correct and reducing the attacker's work by several orders of magnitude. But he seems to have missed that.
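
To make the chapter's advice concrete, here is a minimal Python sketch; it is not taken from the book, and every name in it is hypothetical. A `LoginResult` enum records why a login failed, the user-facing message deliberately treats an unknown username and a wrong password identically (adding only the case-sensitivity reminder), and the precise reason goes to a server-side audit line that only administrators see.

```python
from enum import Enum, auto


class LoginResult(Enum):
    """Hypothetical outcomes of a login attempt, known only to the server."""
    OK = auto()
    UNKNOWN_USER = auto()
    WRONG_PASSWORD = auto()


GENERIC_FAILURE = ("Your username or password is incorrect. "
                   "Remember, passwords are case sensitive.")


def user_message(result: LoginResult) -> str:
    """Text shown to whoever is at the keyboard, legitimate user or attacker."""
    if result is LoginResult.OK:
        return "Welcome!"
    # Same text for both failure modes, so the message can't be used to
    # confirm that a username exists.
    return GENERIC_FAILURE


def audit_line(result: LoginResult, username: str) -> str:
    """Detailed reason, meant for a server-side log read only by administrators."""
    return f"login result={result.name} user={username!r}"


print(user_message(LoginResult.UNKNOWN_USER))
print(audit_line(LoginResult.UNKNOWN_USER, "mallory"))
```

Keeping the detail in the log rather than in the message is one way to help legitimate users without helping attackers.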

Cohen on Howard: Chapter 23

Chapter 23 is a grab bag of good practices.

In general, Cohen's review of this chapter is fair-minded. He criticizes the grab bag approach as indicative of the book's overall lack of organization. Seems fair: Chapter 23 is the "General Good Practices" chapter in "Part IV: Special Topics" (not to be confused with "Part II: Secure Coding Techniques" or "Part III: Even More Secure Coding Techniques").

Having worked on Microsoft CardSpace, I must point out the following line in Cohen's review:

They are right, we should have and use standards that allow representation to be product independent, but of course Microsoft is the company that brings you proprietary versions of everything to keep you from buying other vendor products.

Microsoft CardSpace works on the WS-* protocols, which are open, freely licensed, and jointly developed by many contributors.

Cohen on Howard: Chapter 22

Chapter 22 covers privacy legislation and concerns that need to be addressed by secure software.

Cohen:

Chapter 22 is about legal issues in privacy, but it doesn't even do that well. All it really does is pile more mindless data on the reader without the context to apply it well.

Chapter 22 outlines US and EU privacy legislation (the EU directives on data protection, the Computer Fraud and Abuse Act, the Gramm-Leach-Bliley Act, the Health Insurance Portability and Accountability Act, and the Children's Online Privacy Protection Act) that might make certain demands on your software.

It discusses privacy policies for software and websites and how to integrate with the Platform for Privacy Preferences (P3P) standard. It also warns against making bold privacy claims that you can't back up because of trust issues with business partners or business processes.

It is hard to know exactly what Cohen takes offense at in this chapter, but his review seems unjustified.

Cohen on Howard: Chapter 21

Chapter 21 covers securing the software installation experience.

Cohen:

Then comes the really hokey advice. Like that systems administrators should be able to alter application programs. Really? Since when should users who are the "administrators" on their computers be able to alter the binary code of an executable from a vendor?

Again, Cohen doesn't offer a source for this statement. I don't believe the chapter said this.

Cohen:

And why should we put keys to codes in the system-wide registry file instead of a file that is protected from read by others?

The chapter doesn't advocate this, either. Instead, it points out that the registry can be a nicer choice than a file because access can be controlled on individual registry keys, whereas a file can only be secured as a whole.

Cohen on Howard: Chapter 20

Chapter 20 covers performing a security review, mostly via code reviews.

Cohen doesn't have much to say about Chapter 20 -- which is fair, given that the chapter amounts to only 13 pages.

Cohen on Howard: Chapter 19

Chapter 19 covers penetration testing, including fuzzing techniques.

Cohen grudgingly accepts this chapter, noting that "finally, they start to begin to put a model on the security issue". Is he perhaps referring to the in-depth descriptions of the STRIDE technique, first presented back in Chapter 4?
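
For readers unfamiliar with fuzzing, here is a deliberately tiny mutation fuzzer in Python. It is an illustration, not the book's tooling: `parse_record` is a hypothetical parser for a made-up format that trusts its length byte, and the fuzzer overwrites random bytes of a valid seed input, treating the parser's own `ValueError` as a graceful rejection and recording any other exception as a finding.

```python
import random


def parse_record(data: bytes) -> bytes:
    """Hypothetical toy format: [length][payload ...]["Z" terminator].

    It trusts the length byte, the kind of bug fuzzing is good at finding.
    """
    length = data[0]
    payload = data[1:1 + length]
    if data[1 + length] != ord("Z"):  # IndexError when length is too large
        raise ValueError("bad terminator")
    return payload


def mutate(seed: bytes, rng: random.Random) -> bytes:
    """Overwrite one randomly chosen byte with a random value."""
    data = bytearray(seed)
    data[rng.randrange(len(data))] = rng.randrange(256)
    return bytes(data)


def fuzz(target, seed: bytes, iterations: int = 1000, rng_seed: int = 0):
    """Feed mutated inputs to target and collect unexpected exceptions."""
    rng = random.Random(rng_seed)
    findings = []
    for _ in range(iterations):
        candidate = mutate(seed, rng)
        try:
            target(candidate)
        except ValueError:
            pass  # expected, well-handled rejection
        except Exception as exc:
            findings.append((candidate, type(exc).__name__))
    return findings


findings = fuzz(parse_record, b"\x03abcZ")
print(len(findings), "crashing inputs, e.g.", findings[:1])
```

Real fuzzers are far more sophisticated, but the loop of mutate, execute and watch for crashes is the same.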

Cohen on Howard: Chapter 18

Chapter 18 focuses on security features built into the .NET common language runtime.

Cohen:

In chapter 18 we are told how to write secure .NET code, but of course it ignores all of the previous lessons and tells us to use security features that extend trust from domain to domain without a good basis. Ah well, what should I have expected from the last chapter in this section. They would have done better to cut this whole section out.

Unfortunately, Cohen's review of this chapter is light on specifics but generally pans the chapter.

Cohen on Howard: Chapter 17

Chapter 17 covers preventing denial of service attacks against common resources.

Cohen:

It also gives us a really bad example of using software performance profiling instead of complexity analysis to find possible denial of service exploits. This is the worst example yet of ignoring academic results in favor of inferior industry methods. In particular, a junior programmer is told to ignore all that complexity theory he was taught in the University and simply test each of the routines under different inputs, find the slow routines, and speed them up. Of course in a denial of service scenario, if there is a high complexity function that is fast in almost all cases, a good attacker will find the worst case input sequences and exploit them while the testing scheme will almost certainly miss these cases unless they do complexity analysis.

In the context of the quote, it is clear that the authors are discussing how to fix CPU performance problems in a specific codebase, not how to detect and fix CPU denial of service attacks. Asymptotic complexity analysis is costly and leaves out a lot of the context needed to decide how to improve performance (e.g., how often a given code path is executed). The pragmatic programmer in me has no problem with running a profiler against a system under a broad set of expected loads to find the low-hanging fruit.
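
Both Cohen's worry and the authors' pragmatism show up in a small, hypothetical Python example (not from the book): a naive quicksort that always picks the first element as its pivot. On shuffled input, the kind a profiling run is likely to use, it makes on the order of n log n comparisons; on already-sorted input, which an attacker could choose, it makes n(n-1)/2.

```python
import random


def quicksort_comparisons(items):
    """Naive quicksort using the first element as the pivot.

    Returns (sorted_list, comparisons), counting one comparison against the
    pivot for each element in each partition step.
    """
    comparisons = 0

    def sort(xs):
        nonlocal comparisons
        if len(xs) <= 1:
            return xs
        pivot, rest = xs[0], xs[1:]
        comparisons += len(rest)
        left = [x for x in rest if x < pivot]
        right = [x for x in rest if x >= pivot]
        return sort(left) + [pivot] + sort(right)

    return sort(list(items)), comparisons


n = 500  # kept small: sorted input recurses about n levels deep
ordered = list(range(n))
shuffled = ordered[:]
random.Random(42).shuffle(shuffled)

_, typical = quicksort_comparisons(shuffled)  # what a profiler is likely to see
_, worst = quicksort_comparisons(ordered)     # attacker-chosen input
print(typical, worst)  # worst is n*(n-1)/2 = 124750
```

The two views can coexist: profiling shows where time goes under expected loads, while worst-case analysis is what exposes inputs like `ordered`.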

Cohen on Howard: Chapter 16

Chapter 16 covers securing RPC, ActiveX and DCOM code.

Cohen:

Chapter 16 tells us 50 variables to set to specific values in RPC and Kerberos code (why they don't set these by default I don't know, but expecting Microsoft to do what the authors advise is expecting too much)

It's unclear what Kerberos code Cohen is referring to -- perhaps the flag which specifies using Kerberos as the authentication method for RPC?

The chapter presents a number of useful flags when programming RPC code and details the trade-offs of each choice.

As well, the chapter presents information on disabling previously-released ActiveX code with security flaws.

Cohen on Howard: Chapter 15

Chapter 15 covers security for networks.

Cohen:

In Part 3, my hopes were dashed. Yes, part 2 continues in part 3. The separation is apparently only a trick to meet an administrative requirement of maximum section sizes, or perhaps a limitation of Word based on an integer overrun.

Cohen's joke is especially funny when you realize that David LeBlanc, a co-author of the book, produced the SafeInt library to prevent integer overflow attacks...while working for Microsoft Office.
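
For readers who haven't met the bug class SafeInt targets, here is a rough Python illustration. Python's own integers never overflow, so the sketch simulates 32-bit unsigned wraparound explicitly; the helper names are hypothetical and this is not SafeInt's API. The classic case is an allocation size computed as count times element size, which silently wraps to a tiny number.

```python
UINT32_MAX = 2**32 - 1


def wrapping_mul_u32(a: int, b: int) -> int:
    """What unchecked 32-bit unsigned multiplication in C would produce."""
    return (a * b) & UINT32_MAX


def checked_mul_u32(a: int, b: int) -> int:
    """Multiply two uint32 values, raising instead of silently wrapping."""
    if not (0 <= a <= UINT32_MAX and 0 <= b <= UINT32_MAX):
        raise ValueError("operands must fit in an unsigned 32-bit integer")
    if b != 0 and a > UINT32_MAX // b:
        raise OverflowError(f"{a} * {b} does not fit in 32 bits")
    return a * b


count, element_size = 0x40000001, 4  # attacker-controlled count
print(wrapping_mul_u32(count, element_size))  # 4: a 4-byte buffer for ~1 billion elements
try:
    checked_mul_u32(count, element_size)
except OverflowError as err:
    print("rejected:", err)
```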

Cohen:

Chapter 15 does a poor job of handling network issues with the exception of providing some reasonable advice on building firewall-friendly applications.

Cohen's review does a poor job of describing what is missing here. Amongst other things, the chapter covers how to prevent local applications from hijacking a server's port, how to limit attack surface by binding to as narrow an interface as possible, the insecurities of DNS, and how to write firewall-friendly applications.
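
Binding narrowly can be as simple as naming a specific interface instead of the wildcard address. Here is a minimal Python sketch, assuming a hypothetical service that only local clients need to reach; the Windows-specific `SO_EXCLUSIVEADDRUSE` socket option, which guards against port hijacking, is not shown.

```python
import socket


def open_local_listener(port: int = 0) -> socket.socket:
    """Listen on the loopback interface only, not on every interface.

    Binding to "0.0.0.0" (or "") would expose the service on all network
    interfaces; "127.0.0.1" keeps it reachable only from this machine.
    Port 0 asks the OS for any free port.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    try:
        sock.bind(("127.0.0.1", port))
        sock.listen()
    except OSError:
        sock.close()
        raise
    return sock


listener = open_local_listener()
print("listening on", listener.getsockname())
listener.close()
```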

Cohen on Howard: Chapters 12 to 14

Chapters 12 to 14 deal with more canonicalization issues: database input, web input and internationalization via Unicode.

Cohen:

Chapters 12 and 13 are the same thing as chapter 11, repeated in the context of databases and web servers. In other words, they only give more examples of the same mistakes producing the same sorts of errors in different application environments. Useful for those who didn't get it the first 10 times, redundant for the rest of us.

I dispute that these sections are not useful: they cover real-world attacks and tell you how to prevent them. This is much more actionable than saying "input validation and canonicalization issues exist in the web and database environments as well."
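
As an example of the kind of actionable advice these chapters give for database input, here is a parameterized query next to string concatenation. It is sketched with Python's built-in `sqlite3` module rather than the Microsoft database APIs the book targets, and the table and data are made up.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

hostile = "x' OR '1'='1"

# Vulnerable: the input becomes part of the SQL text, so its quote characters
# change the query's structure and the WHERE clause matches every row.
unsafe_sql = f"SELECT id, name FROM users WHERE name = '{hostile}'"
leaked = conn.execute(unsafe_sql).fetchall()


def find_user(conn: sqlite3.Connection, name: str):
    """Safe: the ? placeholder sends `name` as a value, never as SQL."""
    return conn.execute("SELECT id, name FROM users WHERE name = ?", (name,)).fetchall()


print(len(leaked))               # 2: every row
print(find_user(conn, hostile))  # []: no user has that literal name
```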

Cohen:

Finally, thankfully, chapter 14 tells us to use Unicode for representing everything. Of course this is based on internationalization issues, not security issues, and ends this section of the book. After 325 pages, I found myself wanting more for less.

If you accept that canonicalization is a security issue, how is internationalization canonicalization not a security issue?
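
Here is one place where internationalization and canonicalization meet, as a hypothetical Python sketch rather than the book's code: fullwidth look-alike characters can slip past a `..` check if validation happens before Unicode normalization and some later component normalizes the string. Normalizing to NFKC first and validating the result closes that gap.

```python
import unicodedata


def naive_is_safe(user_path: str) -> bool:
    """Checks the raw string only."""
    return ".." not in user_path.split("/")


def is_safe_relative_path(user_path: str) -> bool:
    """Normalize first, then validate the canonical form."""
    normalized = unicodedata.normalize("NFKC", user_path)
    parts = normalized.replace("\\", "/").split("/")
    return not normalized.startswith("/") and ".." not in parts


# FULLWIDTH FULL STOP (U+FF0E) twice, then FULLWIDTH SOLIDUS (U+FF0F)
sneaky = "\uff0e\uff0e\uff0fsecret.txt"
print(unicodedata.normalize("NFKC", sneaky))                 # ../secret.txt
print(naive_is_safe(sneaky), is_safe_relative_path(sneaky))  # True False
```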

Cohen on Howard: Chapter 11

Chapter 11 addresses input validity in the face of canonical representation issues: the same resource can often be written in several equivalent forms. For example, http://127.1 is equivalent to http://127.0.0.1 and to http://2130706433.

Again, Cohen's comments are accurate. Formal languages and well-defined standard representations would solve the problem; meanwhile, the book presents best practices and suggestions for how to canonicalize input.
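
The 127.1 example can be made concrete with a hypothetical `canonical_ipv4` helper in Python. It maps the shorthand forms traditionally accepted by the C `inet_aton` function (one to four dot-separated parts, with the last part filling the remaining bytes) to a single dotted-quad form, so that checks compare canonical values rather than raw strings. As an assumption of this sketch, it accepts decimal parts only and rejects leading zeros; `inet_aton` would read those as octal, and it also accepts hex, which is not implemented here.

```python
import ipaddress
import re

_DECIMAL_PART = re.compile(r"0|[1-9][0-9]*")  # no leading zeros (octal in inet_aton)


def canonical_ipv4(host: str) -> str:
    parts = host.split(".")
    if not 1 <= len(parts) <= 4 or not all(_DECIMAL_PART.fullmatch(p) for p in parts):
        raise ValueError(f"not a decimal IPv4 host: {host!r}")
    nums = [int(p) for p in parts]
    # Every part but the last is one byte; the last part fills the rest.
    last_bits = 8 * (5 - len(parts))
    if any(n > 255 for n in nums[:-1]) or nums[-1] >= 2 ** last_bits:
        raise ValueError(f"part out of range in {host!r}")
    value = 0
    for n in nums[:-1]:
        value = (value << 8) | n
    value = (value << last_bits) | nums[-1]
    return str(ipaddress.IPv4Address(value))


for form in ("127.0.0.1", "127.1", "2130706433"):
    print(form, "->", canonical_ipv4(form))  # all map to 127.0.0.1
```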

Cohen on Howard: Chapter 10

Chapter 10 presents issues in untrusted input and methods to check input for validity and safety.

Cohen:

You may not believe this, but the book fails to address sequential machine issues across the board and focuses entirely on combinatorics issues under stateless machine assumptions. This is not by intent, as there is no underlying model in the book. They just missed the basic notion that we are dealing with sequential machines. And of course asynchronous issues between communicating sequential machines never even hits their radar. We are told to check input validity by verifying syntax, but the use of redundant values on input to cross check validity is ignored. Input syntax is addressed, but semantics are ignored, and more particularly, we are not told how to build syntax filters that allow different syntactic elements based on previous inputs and program states.

Spot on. There are more advanced techniques than the ones the book presents. One could quibble with the claim that "they just missed the basic notion that we are dealing with sequential machines": perhaps they instead chose to present simpler techniques that are within the grasp of all programmers.
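
Cohen's point about state-dependent syntax filters is easier to see in code. The following Python sketch is purely illustrative, for a made-up line protocol with `USER`, `PASS`, `GET` and `QUIT` commands: each command has its own syntax check, but which commands are legal at all depends on what the session has already seen. Credential checking is out of scope here; `PASS` only advances the state.

```python
import re

# Per-command syntax (hypothetical protocol).
SYNTAX = {
    "USER": re.compile(r"USER [A-Za-z0-9_]{1,32}"),
    "PASS": re.compile(r"PASS \S{1,128}"),
    "GET": re.compile(r"GET [A-Za-z0-9_-]{1,64}(\.[A-Za-z0-9]{1,8})?"),  # no slashes or ".."
    "QUIT": re.compile(r"QUIT"),
}
# Which commands each state accepts, and where each command leads.
ALLOWED = {
    "start": {"USER", "QUIT"},
    "need_pass": {"PASS", "QUIT"},
    "ready": {"GET", "QUIT"},
}
NEXT_STATE = {"USER": "need_pass", "PASS": "ready", "GET": "ready", "QUIT": "closed"}


class SessionValidator:
    """A small finite-state machine: validity depends on prior input."""

    def __init__(self) -> None:
        self.state = "start"

    def accept(self, line: str) -> bool:
        verb = line.split(" ", 1)[0]
        if verb not in ALLOWED.get(self.state, set()):
            return False  # may be well-formed, but not legal in this state
        if not SYNTAX[verb].fullmatch(line):
            return False
        self.state = NEXT_STATE[verb]
        return True
```

A purely per-line regex check would accept `GET report.txt` from a session that never logged in; the state table is what rejects it.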

Cohen on Howard: Chapter 9

Chapter 9 deals with encryption and protecting secret data.

Cohen largely approves of Chapter 9. In particular, he likes the fact that the authors state knowledge of cryptography and mathematics is not sufficient: you must apply it in a way that makes sense. (For a way that doesn't make sense, see the picture to the right.)

Howard:

CryptGenRandom gets its randomness, also known as system entropy, from many sources in Windows 2000 and later, including the following: [...lists on the order of 100 counters...]

The resulting byte stream is hashed with SHA-1 to produce a 20-byte seed value that is used to generate random numbers according to FIPS 186-2 appendix 3.1.

Cohen:

The authors rightly spend a substantial amount of time on generating decent pseudo-random numbers [...] Of course their solution is a Microsoft system call that they assert does it right. I should note that the parameters they claim to use to generate their random seeds contain some very predictable values and sets of values that, while individually may not be very predictable may be more predictable together. For example, when we add the CPU, User, and I/O time it may come to a predictable value (100%) even if each is not very predictable on their own.

Yes, if you add CPU, user and I/O time, the total will come close to 100%. However, the authors make it clear that they concatenate the values rather than sum them. And even if the values were summed, CPU + user + I/O time may not always come to exactly 100, depending on how Microsoft represents these numbers internally.
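
The difference between summing and concatenating is easy to demonstrate. This is a hypothetical Python sketch, not a model of CryptGenRandom's internals: two made-up sets of CPU, user and I/O percentages with the same total collapse to the same sum, but concatenating their bytes before hashing (with SHA-1 here, matching the description quoted above) keeps them distinct. For real randomness in Python, use the operating system's generator via `secrets` or `os.urandom` rather than building your own.

```python
import hashlib
import struct


def seed_from_counters(counters) -> bytes:
    """Concatenate each counter as 8 little-endian bytes, then hash the stream."""
    stream = b"".join(struct.pack("<Q", c) for c in counters)
    return hashlib.sha1(stream).digest()  # 20-byte seed


a = (30, 50, 20)  # hypothetical CPU, user and I/O percentages
b = (50, 30, 20)

print(sum(a) == sum(b))                                # True: the sums collide
print(seed_from_counters(a) == seed_from_counters(b))  # False: concatenation keeps them apart
```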

Cohen:

They do tell us to use salts for hash functions to store things like passwords. Their inability to get the job done at Microsoft shines through when we realize that the password scheme used in Microsoft products didn't use salts for their hashes and resulted in a widely published dictionary-based attack based on this weakness. It saddens me to see that even when the authors get it right the company gets it wrong.

Cohen does not indicate which Microsoft product stores passwords unsalted. Doubtless many earlier Microsoft products had this problem before it was well understood to be a problem. Indeed, many Linux distributions of a bygone time didn't shadow their passwd files, even though /etc/passwd was world-readable and used a small salt space. Even today, WordPress doesn't salt user passwords, and it uses the insecure MD5 hash to boot!
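
For comparison, here is what salting looks like in practice, as a minimal Python sketch using the standard library's PBKDF2 (`hashlib.pbkdf2_hmac`). The function names are hypothetical and the iteration count is an assumption to tune for your hardware. Because every password gets its own random salt, identical passwords produce different stored hashes, which defeats precomputed dictionary attacks of the kind Cohen describes.

```python
import hashlib
import hmac
import os
from typing import Tuple

ITERATIONS = 100_000  # assumption: tune to your hardware and latency budget


def hash_password(password: str) -> Tuple[bytes, bytes]:
    """Return (salt, digest) to store; the salt is random per password."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, digest: bytes) -> bool:
    """Recompute with the stored salt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, digest)


salt1, digest1 = hash_password("correct horse")
salt2, digest2 = hash_password("correct horse")
print(digest1 != digest2)  # same password, different salts, different hashes
```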

Cohen on Howard: Chapters 5 to 8

Chapters 5 to 8 cover buffer overruns, access control lists, least privilege, and basic cryptography errors.

Cohen's review of chapters 5 to 8 is lumped together into 3 paragraphs, ending with:

If you don't know why C leads to off-by-one errors that lead to storage errors that lead to programs doing bad things, these chapters are worth reading. If you like examples without all the facts to make the point, but lots of lines of code showing how to set access controls in Windows, this section of the book is for you. It is not for the same people that section 1 was for, but the audience shift should be obvious enough for most readers to ignore one part or the other appropriately. My summary note on these chapters says "Bad design + bad programmers => Bad code". I think that is telling.

While some of the code samples are definitely very Windows-specific (e.g., how to modify your process token to restrict your permissions, limiting what an attacker gains by exploiting your program), some of the samples and concepts are technology-agnostic and very important.

Chapter 5, for example, focuses on buffer overruns and their ilk. These constantly feature on the SANS Institute's Annual Top 20 Security Attack Targets list.

Cohen on Howard: Chapter 4

Chapter 4 is on modelling threats to software by breaking it down into components and analyzing each part's vulnerabilities.

Chapter 4 introduces modelling techniques for decomposing your applications, including:

- Data Flow Diagrams (DFDs) - modelling how data flows through your application to understand how parts can be attacked

- STRIDE - a way to categorize threats based on what they permit: spoofing identity, tampering with data, repudiation of actions, information disclosure, denial of service, or elevation of privilege

- DREAD - a way of rating priority of threats based on damage potential, reproducibility, exploitability, affected users, and discoverability.
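
A small, hypothetical Python sketch of how the two fit together: each threat is tagged with a STRIDE category and given five DREAD ratings. It assumes the common convention of rating each factor from 1 to 10 and averaging them; the threats and numbers are made up.

```python
from dataclasses import dataclass
from enum import Enum


class Stride(Enum):
    SPOOFING = "Spoofing identity"
    TAMPERING = "Tampering with data"
    REPUDIATION = "Repudiation"
    INFORMATION_DISCLOSURE = "Information disclosure"
    DENIAL_OF_SERVICE = "Denial of service"
    ELEVATION_OF_PRIVILEGE = "Elevation of privilege"


@dataclass
class Threat:
    name: str
    category: Stride
    damage: int  # each rating assumed to be on a 1-10 scale
    reproducibility: int
    exploitability: int
    affected_users: int
    discoverability: int

    def dread(self) -> float:
        """Average of the five DREAD ratings."""
        return (self.damage + self.reproducibility + self.exploitability
                + self.affected_users + self.discoverability) / 5


threats = [
    Threat("Verbose error page", Stride.INFORMATION_DISCLOSURE, 3, 10, 9, 5, 8),
    Threat("SQL injection in login form", Stride.TAMPERING, 8, 10, 7, 10, 10),
]
for t in sorted(threats, key=Threat.dread, reverse=True):
    print(f"{t.dread():4.1f}  {t.category.value:24}  {t.name}")
```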

Cohen:

It also tells us that STRIDE and DREAD are the models of threats and consequences they use at Microsoft - which helps me to understand why they miss the boat so often. You need to get the book for more details because I need to cut down on the content of my review before we all run out of patience with it and the book. The book is almost 800 pages, by the way, and it does have a solid 40 pages of worthwhile content, not a bad bloat ratio for some software products.

Cohen seems to suggest that STRIDE and DREAD are inferior modelling techniques. Inferior to what? He proposes no alternative.

Cohen:

Chapter 4 is also very important to understanding where Microsoft still misses the boat in security. They didn't spend the time needed to do basic modeling and, as a result, their views and processes are incomplete, inconsistent, and lacking in a systematic approach.

It is unclear to me how a chapter explaining how to do basic modelling consistently and completely in a systematic fashion supports this view.

Cohen:

This is consistent with their expressed view of applying theory where appropriate - that you should never do so. That's part of why they will continue to make big stupid mistakes from time to time.

I don't believe they've said this.

Cohen on Howard: Chapter 3

Chapter 3 is on good security principles to live by.

Howard:

A protocol you designed is insecure in some manner. Five years and nine versions later, you make an update to the application with a more secure protocol. However, the protocol is not backward compatible with the old version of the protocol, and any computer that has upgraded to the current protocol will no longer communicate with any other version of your application. The chances are slim indeed that your clients will upgrade their computers anytime soon, especially as some clients will still be using version 1, others version 2, and so on. Hence, the weak version of the protocol lives forever!

Cohen:

It tells us to remember that backward compatibility will always give you grief, which is why they discourage it. That's right, Microsoft does not want backward compatability, but we all knew that a long time ago. Of course the lack of backward compatability also implies that they didn't do it right in the first place and won't do it right now, or alternatively that there is no right, just eternal change. While this is a good business model, it is a poor information protection approach.

Nowhere in the text do the authors discourage backwards compatibility. They just point out that it is a major problem when insecure protocols are discovered. They propose a solution: make the newer protocols configurably backwards compatible, so that they can run in the less secure mode in a low-risk environment and in the more secure mode in a high-risk environment.

This is a common problem that people need to face. The book cites attacks on the SMB protocol that required a breaking change to the protocol. Other real-life examples exist as well: SSH1 had a design flaw and needed to be superseded by SSH2.

To say "the lack of backward compatability also implies that they didn't do it right in the first place and won't do it right now" is also incorrect. Sometimes protocols become weak because previously unknown flaws in their underlying algorithms come to light as the research community cryptanalyzes them; protocols that relied on MD4 are one example. At the time those protocols were designed, using such algorithms may well have been accepted practice -- is it fair to say the designers didn't do it right in the first place? I doubt it.
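The "configurably backwards compatible" idea can be sketched as version negotiation with an administrator-set floor. This is a minimal illustration under stated assumptions, not code from the book: the class `VersionNegotiator`, its parameters, and the use of plain integer version numbers are all hypothetical. Each side advertises the range of versions it speaks; the connection uses the highest version both share, unless that falls below the configured minimum, in which case it is refused.

```java
import java.util.OptionalInt;

/**
 * Hypothetical sketch of "configurably backward compatible" negotiation.
 * Names and version numbering are illustrative assumptions only.
 */
final class VersionNegotiator {
    private final int newestSupported; // newest protocol version this build speaks
    private final int oldestAllowed;   // admin-configured floor; raise it in high-risk settings

    VersionNegotiator(int newestSupported, int oldestAllowed) {
        if (oldestAllowed < 1 || oldestAllowed > newestSupported) {
            throw new IllegalArgumentException("floor must be between 1 and newestSupported");
        }
        this.newestSupported = newestSupported;
        this.oldestAllowed = oldestAllowed;
    }

    /**
     * Returns the highest version both sides support, or empty if that
     * version is below our configured floor or below the peer's own minimum.
     */
    OptionalInt negotiate(int peerOldest, int peerNewest) {
        int candidate = Math.min(newestSupported, peerNewest);
        if (candidate < oldestAllowed || candidate < peerOldest) {
            return OptionalInt.empty(); // refuse rather than silently fall back
        }
        return OptionalInt.of(candidate);
    }

    public static void main(String[] args) {
        // Low-risk deployment: still willing to talk version 1 to old clients.
        VersionNegotiator lenient = new VersionNegotiator(3, 1);
        // High-risk deployment: refuses anything older than version 3.
        VersionNegotiator strict = new VersionNegotiator(3, 3);

        System.out.println(lenient.negotiate(1, 1)); // OptionalInt[1]
        System.out.println(strict.negotiate(1, 1));  // OptionalInt.empty
        System.out.println(strict.negotiate(1, 3));  // OptionalInt[3]
    }
}
```

The same binary serves both environments; only the floor changes. A low-risk network can leave it at 1 so that version-1 clients keep working, while a high-risk one raises it and accepts that old clients will be turned away.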

Howard:

When was the last time you entered a bank to see a bank teller sitting on the floor in a huge room next to a massive pile of money. Never! To get to the big money in a bank requires that you get to the bank vault, which requires that you go through multiple layers of defense. Here are some examples of the defensive layers: [...cites examples of multiple layers of defense against vault robbery...]

Cohen:

They tell us that the most valuable part of a bank is its vault - did they miss the information age somewhere? They probably never worked on bank security. These days, the computers have far more value than the vaults (except the vaults that hold the computers of course).

Nowhere does Howard say the most valuable part of a bank is the vault. Rather, he motivates the need for multiple layers of defense in computer programs by relating how other industries protect their assets. It's certainly true that access to the software and data that manage the bank's money is very important -- but that is exactly the point his analogy is meant to motivate.

Cohen on Howard: Chapter 2

Chapter 2 describes how to weave security into the traditional software development lifecycle from start to end, including training.

Cohen:

Chapter 2 misses by so much it's not even funny. The authors give fundamental misimpressions, for example, that secure software is equivalent to risk management.

At some level, secure software is risk management. As you secure software, you frequently make the software harder to use or less functional. Sometimes, you can get more security without sacrificing ease of use or functionality; but usually it's a balance that the authors of the code get to pick.

Howard:

Don't add any ridiculous code to your application that gives a list of all the people who contributed to the application. If you don't have time to meet your schedule, how can you meet the schedule when you spend many hours working on an Easter egg? I have to admit that I wrote an Easter Egg in a former life, but it was not in the core product. It was in a sample application. I would not write an Easter Egg now, however, because I know that users don't need them and, frankly I don't have the time to write one!

Cohen:

They tell us that no more Trojan Horses will be allowed in Microsoft software. It took them long enough, they used to call them "Easter Eggs", a public relation stunt to make it seem palatable, and one that worked in the large for many years. But this is a good thing and I am glad they finally decided to do this.

In computer lore, words have very specific meanings. The Jargon File is a definitive lexicon for nerd speak.

trojan horse: a malicious security-breaking program that is disguised as something benign

easter egg: a message, graphic, or sound effect emitted by a program in response to some undocumented set of commands or keystrokes

Cohen's use of the very negative phrase "Trojan horse" almost suggests that his review is a troll (conveniently defined on the page after "Trojan horse" in the Jargon File).

Cohen on Howard: Chapter 1

Chapter 1 motivates the need for secure systems, and provides tips on how to convince your organization of the need.

Howard:

As the Internet grows in importance, applications are becoming highly interconnected. In the "good old days," computers were usually islands of functionality, with little, if any, interconnectivity. In those days, it didn't matter if your application was insecure -- the worst you could do was attack yourself -- and so long as an application performed its task successfully, most people didn't care about security.

Cohen:

The misinformation on the book starts in the 2nd sentence of the first paragraph of chapter 1 when we are told that security wasn't important before the Internet grew in importance and that the worst result would be that people could attack themselves. The first network-based global computer virus reached mainframes around the world in the late 1980s - and it did not involve the Internet. [emphasis mine]

Cohen has a point: the introduction of networks is what shifted the focus of computer security, and since the Internet is just one such network, Howard's point is technically incorrect. Is it misinformation? Hardly. That word implies malice or an ulterior motive.

Howard:

[...] So where do you begin instilling security in your organization? The best place is at the top, which can be hard work. It's difficult because you'll need to show a bottom-line impact to your company, and security is generally considered something that "gets in the way" and costs money while offering little or no financial return. Selling the idea of building secure products to management requires tact and sometimes requires subversion. Let's look at each approach.

Cohen:

Indeed, chapter 1 demonstrates just how poor the internal culture at Microsoft is. Such awareness activities as getting the boss to send an email and nominating an evangelist are really not about writing secure code as much as internally about convincing Microsoft to do what you want. It is valuable for salespeople no doubt.

It is unclear what Cohen draws on to support his statement about the poor internal culture at Microsoft -- indeed, the foreword to the book talks about Bill Gates's 2002 Trustworthy Computing directive motivating a change in culture.

Howard:

Principle #1: The defender must defend all points; the attacker can choose the weakest point.

Principle #2: The defender can defend only against known attacks; the attacker can probe for unknown vulnerabilities.

Principle #3: The defender must be constantly vigilant; the attacker can strike at will.

Principle #4: The defender must play by the rules; the attacker can play dirty.

Cohen:

The lack of a basis for design shows up in Chapter 1 and it permeates the book. The four principles selected in the book are hardly what I would choose, but then I was not choosing. "Principle 2 - the defender can defend only against known attacks; the attacker can probe for unknown vulnerabilities" - an excellent example of why you need a design basis and how the lack of one leads you down the wrong path. "Principle 4 - The defender must play by the rules; that attacker can play dirty!" - what rules are those? My view is that this lack of a design basis, the lack of deep understanding of issues and a way to approach dealing with them, and the lack of a theory and a practice underlies the problem that Microsoft and much of the current programming community has with writing secure code. This book does nothing to solve these problems.

Cohen introduces the phrase 'design basis' but never defines it, though he does lament its absence in most software. He complains that the principles presented are not the ones he would choose, but doesn't offer his own.

Cohen on Howard: Introduction

Fred Cohen is a well-respected computer science researcher and professor in the security field. Michael Howard and David LeBlanc are well-respected software engineers in the security field.

They wrote Writing Secure Code, a book on computer programming security.

Cohen wrote a review of the book.

The review is posted on the IEEE Computer Society's Technical Committee on Security and Privacy website. That's a mouthful -- but it's also one of the most respected institutions in software engineering.

The review is 5,400 words long. It analyzes the book chapter by chapter and finishes with a suggestion for what the book ought to have been called:

"How poor quality programmers at Microsoft have produced hundreds of instances of the same 10 big mistakes in their code, and how they can do their jobs a little bit better".

The software engineering profession is often criticized for a perceived lack of rigor and professionalism. Read the review. Analyze it in context: one researcher criticizing another researcher's work, published in an electronic journal read by their peers. Doesn't say much for software engineering, does it?

This is the first of a series of blog posts. I am going to go through Cohen's review chapter by chapter and give the point of view of a 4th-year software engineering student who has interned at Microsoft on:

- a Trustworthy Computing team, working on the same team as David LeBlanc

- the Microsoft CardSpace team, working on Microsoft's digital identity and privacy software

I actually received a free copy of the book when I joined Microsoft. It is also a required text for one of my school courses. When you read my commentary, remember my biases, and also remember my youth -- I am criticizing a man who has been in the field for 30 years. If I can find so many questionable points in his review, what does that say about our field?

Tuesday, March 20, 2007

ATEs at Waterloo

Thanks, Waterloo!

From the short list of courses that meet the ATE requirements for me to graduate, intersected with the set offered this semester, complemented with the times of core SE courses, there are three courses I want/can take:

* ECE 493: Special Topics in ECE, 7th offering: Security

* ECE 454: Distributed Systems

* CS 452: Real-Time Operating Systems (The "Trains" Course)

The ECE courses require course overrides because I'm an SE student, and the CS course has only 6 spots for SE students (but 34 for CS -- even though at present there are only 6 CS students signed up for it).

What a treat! I knew this is why I signed up for SoftEng at UW!

Thursday, March 15, 2007

I hate you Comcast

Do you want my money or not?!

Last time it was: "Unknown error. Call 1-800-COMCAST."

This time it's: "Unable to localize to your account at this time. Please try again later."

What the hell do you have to localize?! Your site is English, I speak English. NO LOCALIZATION NECESSARY! GRRRRR!

Tuesday, March 13, 2007

Entropy

At Payless ShoeSource:

"Phone number, please," says the clerk at Payless Shoes to my housemate, Sarah.

"Umm, why do you need my phone number?"

"It's so we can track how well shoes sell at given stores."

Me, now: "That makes no sense. Can you just type in 10 random digits?"

"Err, yeah, I could. But, really, given chaos theory, is anything really random?"

Well, actually, yes. "Well, actually, yes. The interarrival times of radioactive decay events are random." And from what little I know, I think chaos theory just says that some things that appear random aren't random.

"Oh, but it just looks that way because the sample size is small. Taken over infinity, patterns would start to occur."

Twitch, twitch.

This reminds me of the time I phoned NET10 tech support.

"Can we have your email address, sir?"

"Why?"

"It's so we can email you advertisements about the product."

"I don't want to give you my email address, then."

"You don't have an email address?"

"No, I just don't want to give it to you so you can spam me."

"You don't have an email address?"

"That's correct."

"And your birthdate, sir?"

"June 14, 1911."

"Thank you."

Wednesday, March 07, 2007

Frugality

Why don't I get a haircut? Why don't I have a cell phone? Why don't I have pants that aren't 3+ years old and full of holes?

Because I'm cheap.

Apparently, it's a common affliction among CS types. An acquaintance who works at Google finally upgraded her TV after getting maximum value out of the old one.

Monday, March 05, 2007

Standing water

The insurance tags on my long-term rental from Avis just expired.

So I got it replaced, and now me and my housemates are the -- er, proud? -- drivers of a Chevy HHR.

This car feels like such a piece of garbage. It is a study in how not to do user interfaces.

Things are in all the wrong places. The driver's drink holder is well behind his seat. The window controls are at about calf level. The most prominent dial on the instrument panel is the tachometer.

Oh, and the glove box is full of fetid water. That might be factory default, might be the performance package; I didn't ask.

Agape

This past Saturday, I learned the true meaning of the word agape. And I don't mean the brotherly love kind.

Since coming to Seattle, I've heard a great deal about the local strip clubs. There's a war against adult entertainment going on, they say. No alcohol can be served -- and this is just the first sortie. If those upright folk in City Hall get their way, strip clubs will be brightly lit, strippers will be behind a railing several feet away from the closest patron, tipping will be illegal, etc., etc.

Clearly, I had to experience this before it got legislated out of existence or I crossed into the land of the betrothed.

Thus, it was after obtaining business sign-off from our significant others that Simon, Dan and I trotted off to Showgirls on Seattle's 1st Avenue.

Giddy on the naughtiness of it all, we seated ourselves and awaited untold dark, erotic adventures. As it turned out, we'd have a long time to wait and the adventures would remain untold.

Each dancer seemed to have about 90 seconds on stage to do her bit. Sex isn't hot when you're rushing to beat the clock -- turns out, stripping's the same way. It was a race to kick off the clothes, avoid making eye contact with the patrons, and get the hell off the stage. I couldn't help but compare it to Can Can, where parts of the cabaret-style entertainment were much, much more sensual. (Oh, and forget the no-touching rule: at Can Can, they invite you up on the stage to dance and get up close and personal. Granted, the person whose behind I had the unique opportunity to knead was a bit sweaty. And hairy. And male. But I digress.)

I get the feeling that there were only two folks at Showgirls who got their money's worth: Dan, who took a nap, and a rather dimwitted-looking fellow in the front row who seemed unable to close his mouth the entire time we were there.

PS: Kudos to Rodney, who, when informed that the plans for the evening included a strip club, simply said "no thanks, I don't agree with that and I don't want to do that". And congrats on your job offer!

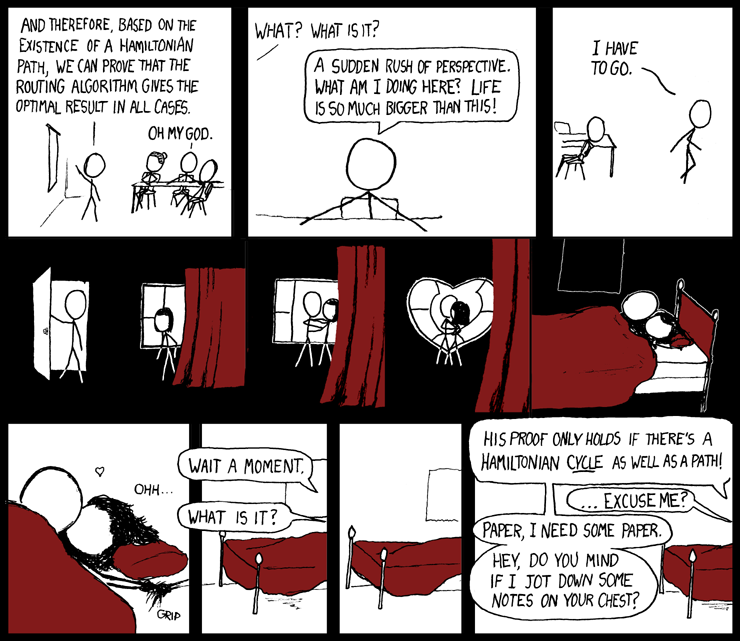

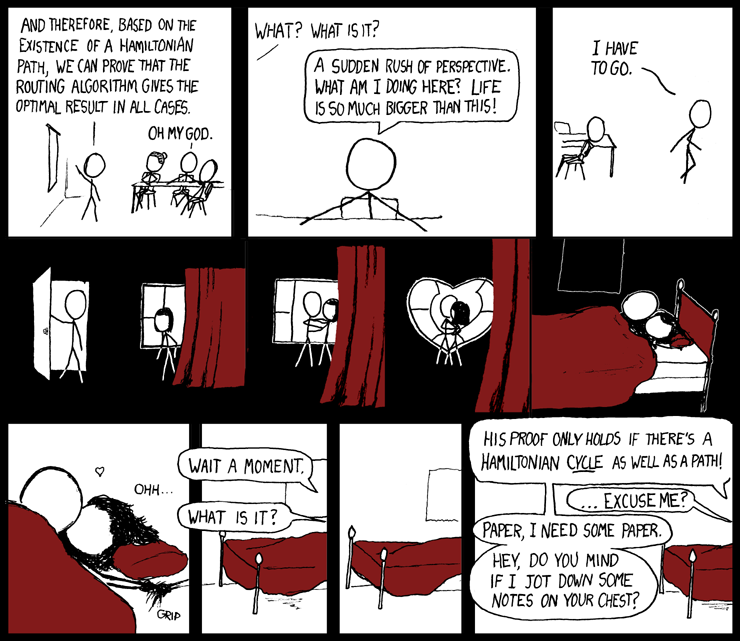

Karatsuba

I'm pretty sure I owe Jenn an apology for something like this from when I was in CS 134, implementing a class that could handle arbitrarily large integers with the common operations (addition, subtraction, multiplication, division, exponentiation).

I wanted to implement Karatsuba multiplication. It's marginally faster than gradeschool long multiplication. Yup, you heard right: marginally faster. And there were no bonus marks to be had. And hell, implementing multiplication was only worth like .1% of your final mark. And I was trying to optimize *JAVA*. Definitely a missing-the-forest-for-the-trees moment. I didn't end up seeing the results I expected, so fine, I said, fuck it!

And I went on to other pursuits.

But then... like the crescendo of a great concerto, the explosion of a whole sky's worth of fireworks and the crash of thunder all at once, it became clear to me: I was being sloppy with object creation in a tight loop!
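For anyone who hasn't met it, the trick is small enough to sketch. This is a minimal illustration, not the CS 134 code: it assumes `java.math.BigInteger` as the number type (the assignment had you build your own), and the class name `Karatsuba` and the 64-bit cutoff are hypothetical choices. Splitting each operand in half, schoolbook multiplication needs four half-size products; Karatsuba needs three. Asymptotically that is O(n^1.585) instead of O(n^2), but the extra additions and shifts mean it only pulls ahead on fairly large operands.

```java
import java.math.BigInteger;

/** Hypothetical Karatsuba sketch on top of java.math.BigInteger. */
final class Karatsuba {
    // Operands at or below this many bits are multiplied directly by the library.
    // The value is an arbitrary assumption, not a tuned constant.
    private static final int CUTOFF_BITS = 64;

    public static BigInteger multiply(BigInteger x, BigInteger y) {
        // Deal with signs once, then recurse on magnitudes only.
        int sign = x.signum() * y.signum();
        if (sign == 0) {
            return BigInteger.ZERO;
        }
        BigInteger product = multiplyMagnitudes(x.abs(), y.abs());
        return sign < 0 ? product.negate() : product;
    }

    private static BigInteger multiplyMagnitudes(BigInteger x, BigInteger y) {
        int n = Math.max(x.bitLength(), y.bitLength());
        if (n <= CUTOFF_BITS) {
            return x.multiply(y); // small operands: let the library do it
        }
        int half = n / 2;
        // Split x = xHigh * 2^half + xLow, and likewise for y.
        BigInteger xHigh = x.shiftRight(half);
        BigInteger xLow = x.subtract(xHigh.shiftLeft(half));
        BigInteger yHigh = y.shiftRight(half);
        BigInteger yLow = y.subtract(yHigh.shiftLeft(half));

        // Three recursive products instead of four.
        BigInteger high = multiplyMagnitudes(xHigh, yHigh);
        BigInteger low = multiplyMagnitudes(xLow, yLow);
        // (xHigh + xLow)(yHigh + yLow) - high - low = xHigh*yLow + xLow*yHigh
        BigInteger cross = multiplyMagnitudes(xHigh.add(xLow), yHigh.add(yLow))
                .subtract(high)
                .subtract(low);

        // Recombine: high * 2^(2*half) + cross * 2^half + low.
        return high.shiftLeft(2 * half).add(cross.shiftLeft(half)).add(low);
    }

    public static void main(String[] args) {
        BigInteger a = new BigInteger("123456789012345678901234567890123456789");
        BigInteger b = new BigInteger("-987654321098765432109876543210987654321");
        System.out.println(multiply(a, b).equals(a.multiply(b))); // true
    }
}
```

Note that every level of the recursion allocates a handful of fresh immutable `BigInteger` objects. In a hand-rolled class, that same kind of churn in a tight loop can easily eat the constant-factor savings the algorithm was supposed to buy.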

And I think I also owe Jenn similar apologies for SE 141 (fastest clock rate on the Xilinx boards for project #3), CS 241 (removing the arbitrary restriction on the number of local variables supported by my implementation of SL) and ECE 354 (corner case where A5 gets destroyed on the MCF5307 when context switching).

Side note: Jenn and I had an epiphany after seeing a bike store on Hamilton Street in Vancouver. If we ever live in Hamilton, we will dispense with our cumulative 8 years of study of math and engineering to be the proprietors of a bike shop named the Hamiltonian Cycle.

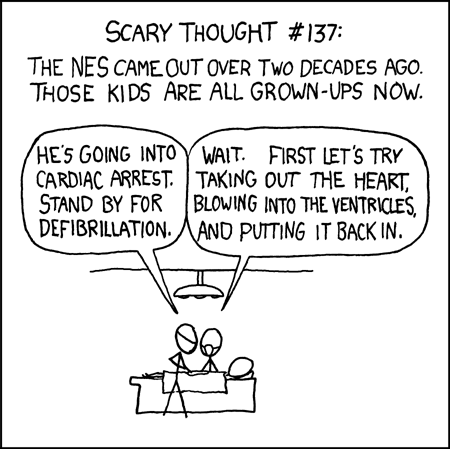

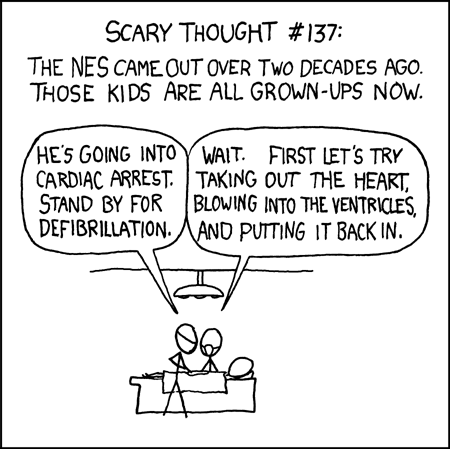

Surgeons in a Half-Shell

A friend of mine commented a couple years ago that we're going to be flooded with Hollywood remakes of the shows of our childhoods, since the kids who enjoyed the shows back then are now the artists producing the movies.

I guess medical school takes longer than film school, since just last week the xkcd guy produced this gem:

Why I don't have a cellphone

My parents yell at me because I don't have a phone.

I tell them it's because cell phones are overpriced monstrosities, VOIP phones don't work and landlines charge outrageous installation fees for people who move around every 4 months.

In reality, it's because all these giant megacorps don't understand the Internet.

I mean, hey, it's only been around since '69. I begin to doubt that Telus knows "the future is friendly" when they're still trying to catch up to the events of the 1960s. Hell, computers don't even remember what 69 is anymore. Because of the Y2K problem, if you told Telus's mainframe that you were born in "69", it'd think you were born in 2069. It probably wouldn't let you open an account and that would be a blessing in disguise.

Because, you see, with every account comes the bill. Every month.

Let's see here... that's potentially 1 internet bill, 1 cable bill, 1 phone bill, 1 hydro bill, 1 rent bill, 1 water/sewage bill, and 1 furniture rental bill, all of which need to be paid for every abode.

And if you're a lucky co-op student (what sort of interview is that smiling, squatting student preparing for? Porcelain Receptacle Quality Assurance Engineer?), you have two houses. In different countries, too, where the megacorps involved are different, so you can't even consolidate bills for the same services.

Each month, I am either the primary recipient or co-recipient of 8 bills. And no-one uses the same billing cycle, so every 4 days, I get a new bill.

I'm happy to delegate the authority to dip into my bank accounts in the US or Canada. Despite working for a team that wants to make Internet transactions secure and traceable, I'm happy to release the keys to the kingdom. I'm happy to say, "Checking acct #602-1481-4, transit 1085, PIN 5152. Enjoy!"

But the megacorps' websites all seem to have the reliability of an emo, teenaged McDonald's worker. "Error #1048: Please call 1-800-COMCAST." "Sorry, PSE is down for scheduled maintenance!" "Cannot find tenant account." "The balance due for Rogers customer 600194018, as of Mar 5, is NOT AVAILABLE." "Can't validate your identity."

Fine, some of these are transient problems. But Rogers and Comcast -- ironically, both Internet service providers -- reliably fuck up.

For me, when deciding which services to subscribe to, I balance the high cost of remitting payment against the service's usefulness. Having a place to sleep? Sure. Being able to take a crap? Sure. Being able to take a call while doing either of these? Nah.

So, mom and dad, that's why I don't have a cell phone.

PS: Comcast, how can you have the gall to charge a $129 activation fee for VOIP service? Normally, I don't care about the one-time fees. It's the recurring fees I hate. But an activation fee for an Internet-based service?!

Let's see... if I remember my databases course rightly, that's something like...

INSERT INTO voip_customers VALUES ('Colin Dellow');

What is that, $2.30 per keystroke? At least with traditional phone service I can envision my beefy activation fee producing a hearty *THUNK* as a lever is pulled somewhere and the vacuum tubes are linked together.

I tell them it's because cell phones are overpriced monstrosities, VOIP phones don't work and landlines charge outrageous installation fees for people who move around every 4 months.

In reality, it's because all these giant megacorps don't understand the Internet.

I mean, hey, it's only been around since '69. I begin to doubt how Telus knows that "the future is friendly" when they're still trying to catch up to the events of the 1960s. Hell, computers don't even remember what 69 is anymore. Because of the Y2K problem, if you told Telus's mainframe that you were born in "69", it'd think you were born in 2069. It probably wouldn't let you open an account and that would be a blessing in disguise.

Because, you see, with every account comes the bill. Every month.

Let's see here... that's potentially 1 internet bill, 1 cable bill, 1 phone bill, 1 hydro bill, 1 rent bill, 1 water/sewage bill, and 1 furniture rental bill, all of which need to be paid for every abode.

And if you're a lucky co-op student (what sort of interview is that smiling, squatting student preparing for? Porcelain Receptacle Quality Assurance Engineer?), you have two houses. In different countries, too, where the megacorps involved are different, so you can't even consolidate bills for the same services.

Each month, I am either the primary recipient or co-recipient of 8 bills. And no-one uses the same billing cycle, so every 4 days, I get a new bill.

I'm happy to delegate the authority to dip into my bank accounts in the US or Canada. Despite working for a team that wants to make Internet transactions secure and traceable, I'm happy to release the keys to the kingdom. I'm happy to say, "Checking acct #602-1481-4, transit 1085, PIN 5152. Enjoy!"

But the megacorps' websites all seem to have the reliability of an emo, teenaged McDonald's worker. "Error #1048: Please call 1-800-COMCAST." "Sorry, PSE is down for scheduled maintenance!" "Cannot find tenant account." "The balance due for Rogers customer 600194018, as of Mar 5, is NOT AVAILABLE." "Can't validate your identity."
